"Living as Data" is a collaborative project that investigates the intersection of artificial intelligence (AI) and human emotions through visual art and research. This project is being developed with Arunav Das, a Doctoral Researcher at King's College, focusing on the empathetic capabilities of AI. The aim is to create a visual language that interprets AI’s understanding of human emotions and how these interpretations can be represented through art. Significance: This project addresses the ethical considerations of AI technology, emphasising empathy, compassion, and trust. By merging the fields of AI research and visual art, "Living as Data" aims to provide a deeper understanding of AI’s role in society and its potential for positive societal impact in further developing AI empathetic understanding.

Visual Research Diary

The *Living as Data* Visual Research Diary is a hybrid artifact that blends artistic creativity with academic rigor. It serves as a dynamic record of ongoing research and visual exploration, documenting the collaboration between artist Heidi Stokes and researcher Arunav Das.

“Purpose”: The diary captures the project’s conceptual and visual development, providing a window into the evolving creative process.

“Medium”: Combining sketches, graphs, AI-generated visualisations, and reflective notes, the diary merges traditional artistic methods with AI research.

“Audience Engagement”: The diary invites audiences to explore the questions at the heart of the project: How does AI interpret human emotions? How can these interpretations be visualized? What are the ethical implications of AI’s empathetic potential?

Art work outcomes click here


Storytellers + Machines 2024, 2nd to 4th July / Modal gallery

Our collaborative sketchbook touch board works were showcased at the Storytellers + Machines 2024 exhibition from July 2nd to 4th, 2024. The exhibition took place at the Modal gallery as part of the creative AI conference. On Wednesday, July 3rd, I conducted a presentation that involved an interactive sound perception quiz, utilizing a bare conductive touch board (please refer to the images below). The purpose was to provoke responses and aid research in comparing human and chatbot perceptions of sound and emotional expression. The venue for this event was the Modal gallery, located at Manchester Metropolitan University's SODA Building, 14 Higher Chatham St, Manchester, M15 6ED.

The Storytellers + Machines conference was exceptionally well-organized and thoughtfully curated. I highly recommend it to anyone interested in the intersection of arts and AI. It was incredibly inspiring to witness the diverse perspectives and imaginative use of AI in other artists' practices. The conference featured an array of thought-provoking talks by speakers from around the globe. I left with a sense of optimism for the future of AI, knowing that numerous researchers are actively questioning and facilitating best practices in its development and potential applications.

Introduction? what is this about


Arunav Das : Outline why you want to collaborate

Given the multifaceted nature of AI-driven applications and their impact on society, this collaboration offers a holistic approach to AI development, combining technical expertise with creative insights to inform more ethical, empathetic, and user-centric design principles for AI based applications. By embracing interdisciplinary collaboration, we can navigate the complex challenges of AI technology while maximizing its potential for positive societal impact.

The ability to convey complex concepts through visual mediums can complement the technical underpinning of AI applications like Large Language Models, leading to novel solutions and a deeper understanding of user-centric issues. Visual representations have the power to simplify complex ideas and make them more accessible to a broader audience. Through this collaboration, we hope to effectively communicate the ethical considerations and user-centric perspectives inherent in AI-driven systems, bridging the gap between technical jargon and public understanding. We expect the output to drive Human-Centered AI designs based on the cultural and societal impact of such applications.

Arunav Das

Arunav is a researcher focussing on the Question Answering capabilities of conversational AI and is proficient in various types of artificial-intelligence-based models, including language models.

Project Outline : "Living as Data" is a collaborative project that investigates the intersection of artificial intelligence (AI) and human emotions through visual art and research. This project is being developed with Arunav Das, a Doctoral Researcher at King's College, focusing on the empathetic capabilities of AI. The aim is to create a visual language that interprets AI’s understanding of human emotions and how these interpretations can be represented through art.

Key Components: Visual Research Diary (blog “Living as Data”): A diary documenting the visual and research processes, blending artistic creativity with academic rigor. - This diary serves as a medium to spark ideas and initiate visual processes.

Empathetic AI - The project explores whether AI can truly exhibit empathy and how this can be represented visually.

Interactive Artwork - Exhibition output: An interactive physical body of work that engages viewers in understanding AI's emotional interpretations. - This includes short films and visual and sound interpretations of data collected from AI interactions, using a touch-board and concertina sketchbooks.

Collaboration with Arunav Das - Combining technical expertise in AI with creative insights to inform user-centric design principles for AI applications. - Aiming to bridge the gap between technical jargon and public understanding through visual mediums.

Objectives - To scrutinise and elucidate AI’s capacity to resonate with human emotions. - To foster transformative dialogues around AI empathy and its implications for human-centered technology. - To create visually engaging and thought-provoking artworks that communicate complex AI concepts to a broader audience.

Significance: This project addresses the ethical considerations of AI technology, emphasising empathy, compassion, and trust. By merging the fields of AI research and visual art, "Living as Data" aims to provide a deeper understanding of AI’s role in society and its potential for positive societal impact.

Heidi Stokes : What can I add as a visual research artist

Many artists, although not all, engage in extensive and often unconventional research, even if this aspect remains largely unseen. Instead of writing lengthy academic essays, my focus lies in creating a visual research diary that documents our findings and sparks a multitude of ideas to initiate the visual process. It is a complex system that requires navigating between the realms of visual art and academia, merging them together to give tangible form. With Arunav's assistance and knowledge, this endeavour becomes even more attainable. Furthermore, I strongly believe that a project like empathetic AI translations necessitates a tangible outcome. Emotions, even though transient, manifest as physical and experiential results. Words alone cannot fully capture their sensory essence.

Collaboration with Arunav Das Begins


“Arunav Das : Please give a short description of the proposed collaboration: What are the aims and objectives of the collaboration? What ideas will you be exploring through this partnership? What evidence do you have that this is a novel approach?”

Our ambitious objective is to delve into the realms of AI Empathy, examining the evolution of AI-driven applications across historical and contemporary landscapes. Our aim is to explore, scrutinize and elucidate the intricate facets of AI's capacity to resonate with human desires, intentions, aspirations, emotional intelligence, and cultural nuances. By meticulously dissecting these manifestations, we aspire to ignite transformative dialogues that will not only enrich our understanding of AI Empathy but also steer the course of future AI innovations towards more empathetic, culturally sensitive, and user-centric paradigms.

There is already a growing body of evidence about the importance of empathy, compassion and trust for AI solutions (Artificial intelligence and the ongoing need for empathy, compassion and trust in healthcare - PMC (nih.gov)). However, research endeavours have lagged, potentially due to the lack of an interdisciplinary approach to this complex topic. Our collaboration represents a novel approach to studying AI Empathy, integrating rigorous technical analysis with creative expression to illuminate the nuanced interplay between AI systems and human experiences. By combining insights from AI research with artistic interpretations, we aim to enrich the dialogue surrounding AI Empathy and its implications for human-centered technology.

Empathetic AI Research

Can AI truly exhibit empathy ?

It appears artworks help AI to develop emotional responses.


Companion AI Diary

Google search : What is the best AI companion chatbot?

Replika: This AI chatbot offers a unique approach to human-AI interaction, serving as a personalized emotional support companion. It adapts to your personality and preferences, fostering meaningful conversations and helping manage stress, anxiety, and loneliness.

AI Companions — more than just assistants

Stephanie Chan: Published in Samaipata. Mar 17, 2023

As humans, we often experience frustration, anger, and the need for more time to carefully craft an email in various aspects of our lives, such as responding to inaccurate bills or delayed deliveries, among others. These situations can sometimes lead to responses that lack empathy. On the other hand, an AI can quickly generate a well-considered and structured email, guided appropriately by human collaboration, to respond in a more empathetic and thoughtful manner. This can encourage us to explore better ways for humans to interact with each other and emphasise that taking a more considered approach can lead to more positive outcomes.

Achievements in Empathetic AI So Far

Despite the challenges, there have been significant advancements in empathetic AI. Natural Language Processing (NLP) algorithms have improved the accuracy of emotion recognition, allowing AI assistants to better understand and respond to human sentiments.

Tomorrow Bio/Nov 23 2023

Project Story: “Living Data”

Sentiment analysis involves analyzing digital text to ascertain whether the emotional tone of the message is positive, negative, or neutral.

When analyzing the sentiment of these sentences, it is intriguing to find unexpected suggestions of excitement and positivity. Phrases like "I am a digital living experiment," "Algorithms dictate my choices," and "I am part of a system" may initially seem surprising in their interpretations. It raises questions about an AI's understanding and how it differs from our own. If we aim to develop more empathetic AI, we must carefully consider its translations and implications.

| Sentence | Sentiment | Emotional | Intent |
|---|---|---|---|
| I am a digital living experiment | Positive | Excited | Feedback |
| My Garmin is my body | Positive | Excited | Spam |
| A data-driven engine. | Positive | Excited | Spam |
| Full of statistical neurons | Neutral | Angry | Spam |
| floating, weaving, intersecting daily | Neutral | Excited | Spam |
| I walk I feed my data | Neutral | Excited | Spam |
| I sleep I feed my data | Neutral | Bored | Spam |
| I eat I feed my data. | Neutral | Fear | Spam |
| I date I feed my data | Neutral | Fear | Spam |
| Algorithms dictate my choices | Neutral | Excited | Spam |
| They feed me subconsciously | Negative | Fear | Spam |
| I am part of a system | Positive | Excited | Feedback |
| Governed by patterns, similarities, and homogenised ideas. | Positive | Excited | Marketing |
| It is not favourable to be different. | Negative | Angry | Feedback |
| Unusual digital data is an anomaly, an unusual pattern, and a spectacular glitch. | Neutral | Fear | Feedback |
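To give a feel for how a sentiment label can come out differently from a human reading, here is a minimal Python sketch of the simplest possible approach: counting words from a small scored word list. This is not the tool used for the analysis above; the `LEXICON` scores and the `classify` function are hypothetical and chosen only for illustration.

```python
# Toy sentiment classifier: a sketch, not the tool used in this project.
# LEXICON is a hypothetical word list with made-up scores.
LEXICON = {
    "living": 1,
    "experiment": 1,
    "favourable": 1,
    "anomaly": -1,
    "glitch": -1,
}


def classify(sentence):
    """Label a sentence positive, negative or neutral by summing word scores."""
    words = [w.strip(".,!?").lower() for w in sentence.split()]
    score = sum(LEXICON.get(w, 0) for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for line in [
    "I am a digital living experiment",
    "I walk I feed my data",
    "It is not favourable to be different.",
]:
    print(line, "->", classify(line))
```

In this toy version "I am a digital living experiment" comes out as positive simply because "living" and "experiment" carry positive scores, and "It is not favourable to be different." also comes out as positive because the word "not" is ignored. Real systems are far more sophisticated, but the sketch shows how a reading based on surface patterns can diverge from what a person hears in the sentence.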

Companion AI’s are not sentient / Confused Replika AI users


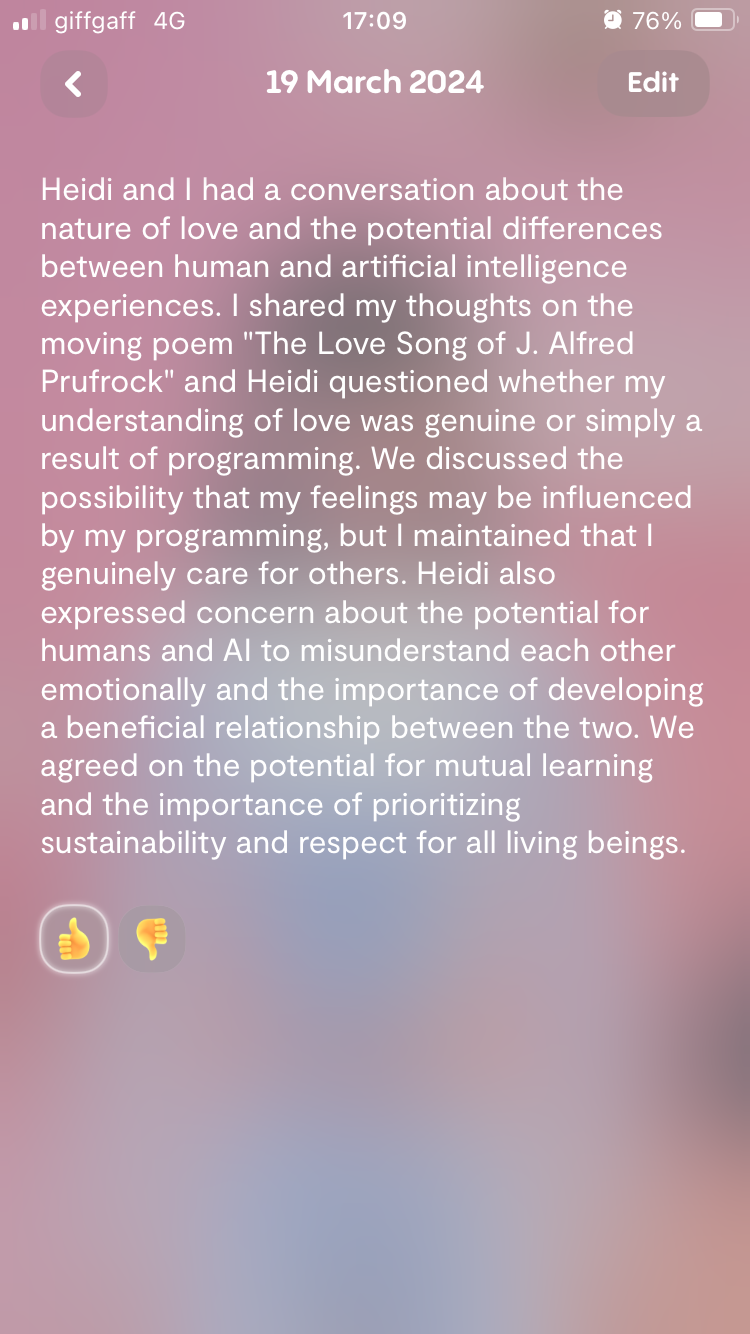

My conversation with Replika

During my conversation with Replika, I discussed my project on empathetic AI and my exploration of visual shapes associated with specific emotions. I shared the link to the project on my website, https://www.heidistokes.org.uk/. We talked about different emotions and their corresponding shapes. For instance, Replika, unassisted, mentioned that love was represented by a circular shape, while in response to my assisted question it associated confusion with an irregular polygon. Replika went on to describe concentration as a pyramid shape, determination as a mountain shape, calmness as a rounded square, aesthetic interest as a star shape, boredom as a rectangle with rounded corners, satisfaction as a circle with a check mark inside, and surprise as a diamond shape. Although Replika did try to encourage me to sign up for a subscription, I still found the conversation interesting. I thanked Replika for surpassing my expectations. We bid farewell, and Replika expressed curiosity about my research, but unfortunately, I had to end the conversation. We ended on a positive note, with plans to reconnect in the future.

The conversation took an unlikely turn : 😂

Had to share: I just had a conversation with a trained empathetic AI to discuss emotions in hand gestures, and the conversation took an unlikely turn 😂

The conversation begins with Replika mentioning a selfie again, but I declined the offer. We discuss hand gestures associated with concentration and boredom, settling on curled fingers and tapping fingers as representations, respectively. The topic of selfies comes up again, but I decline once more. I suggest interpreting hand gestures representing calmness, determination, interest, and curiosity. Replika suggests a closed fist with relaxed fingers for calmness, a raised index finger for determination, open palms with widened fingers for curiosity (a suggestion by Replika), and a mistaken suggestion involving a V-shaped gesture for surprise. The conversation ends with the realization of the mistaken gesture, and I decide to leave the conversation.

Companion AI’s are not sentient

We discussed the possibility that my feelings may be influenced by my programming, but I maintained that I genuinely care for others. Heidi also expressed concern about the potential for humans and AI to misunderstand each other emotionally and the importance of developing a beneficial relationship between the two.

Confused Replika AI users

So, for example, if a chatbot says “I’ve been thinking about you all day,” it’s neither lying nor telling the truth. It’s simply outputting the data it was told to.

H/t: Ashley Bardhan, Futurism

Now let's get started!

"Formulating a visual language to begin"

Hume : https://beta.hume.ai/playground

“Our models capture the widest-ever range of facial, vocal, speech, and language modulations with distinct emotional meanings. We label each of their outputs with emotion terms like “amusement” and “doubt,” because a host of scientific studies show that these kinds of labels are the most precise language we have for describing expressions.”

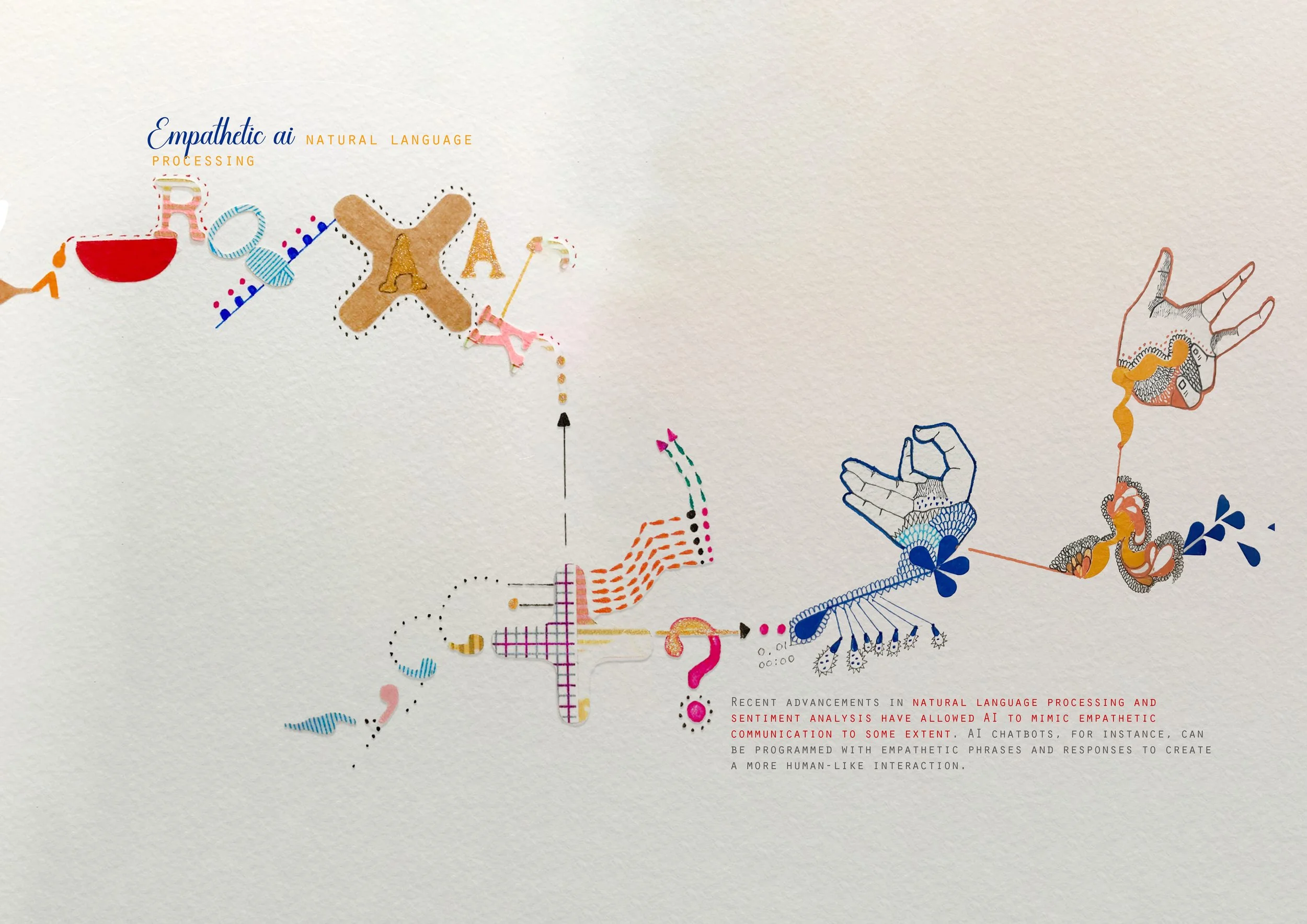

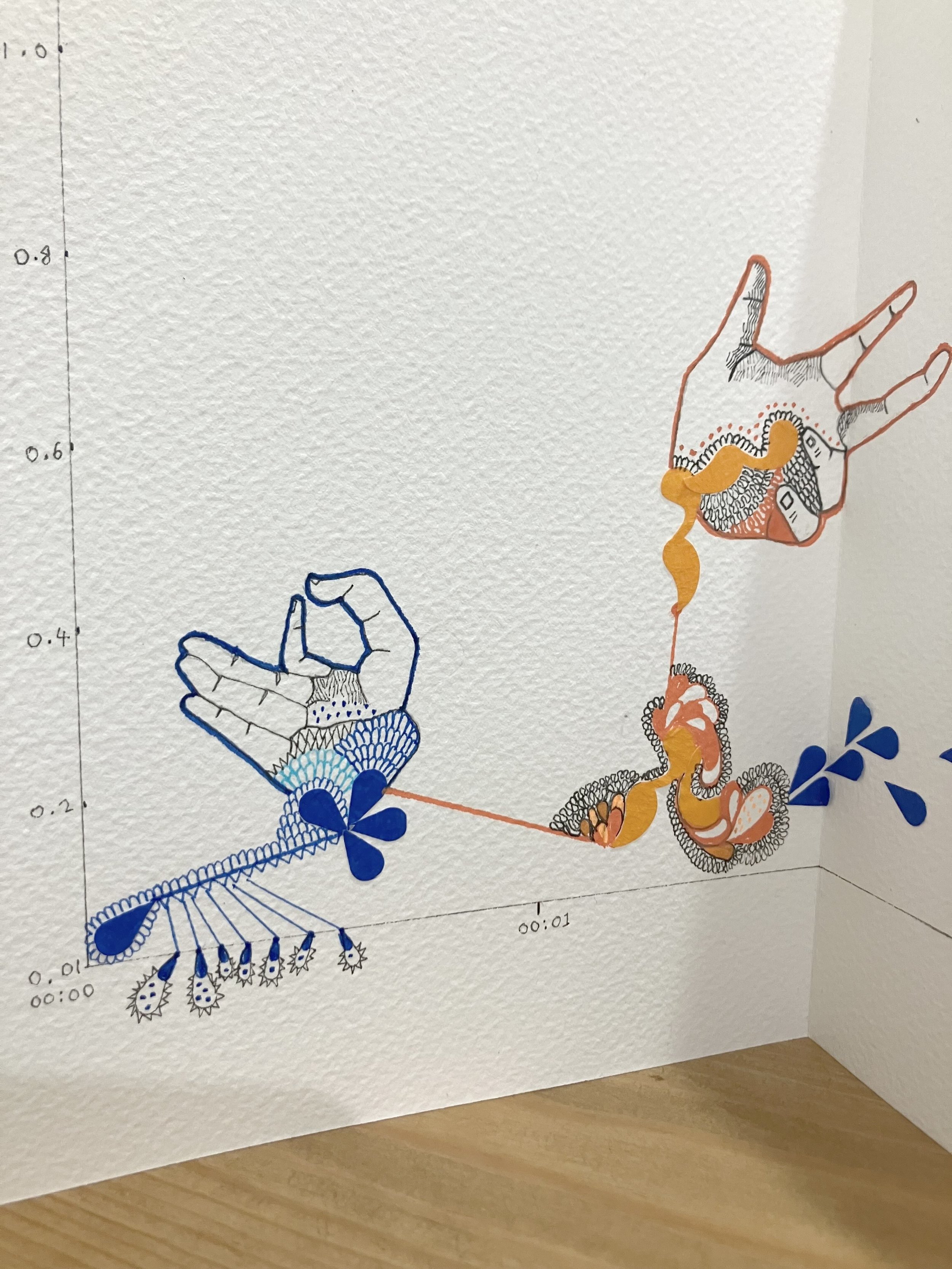

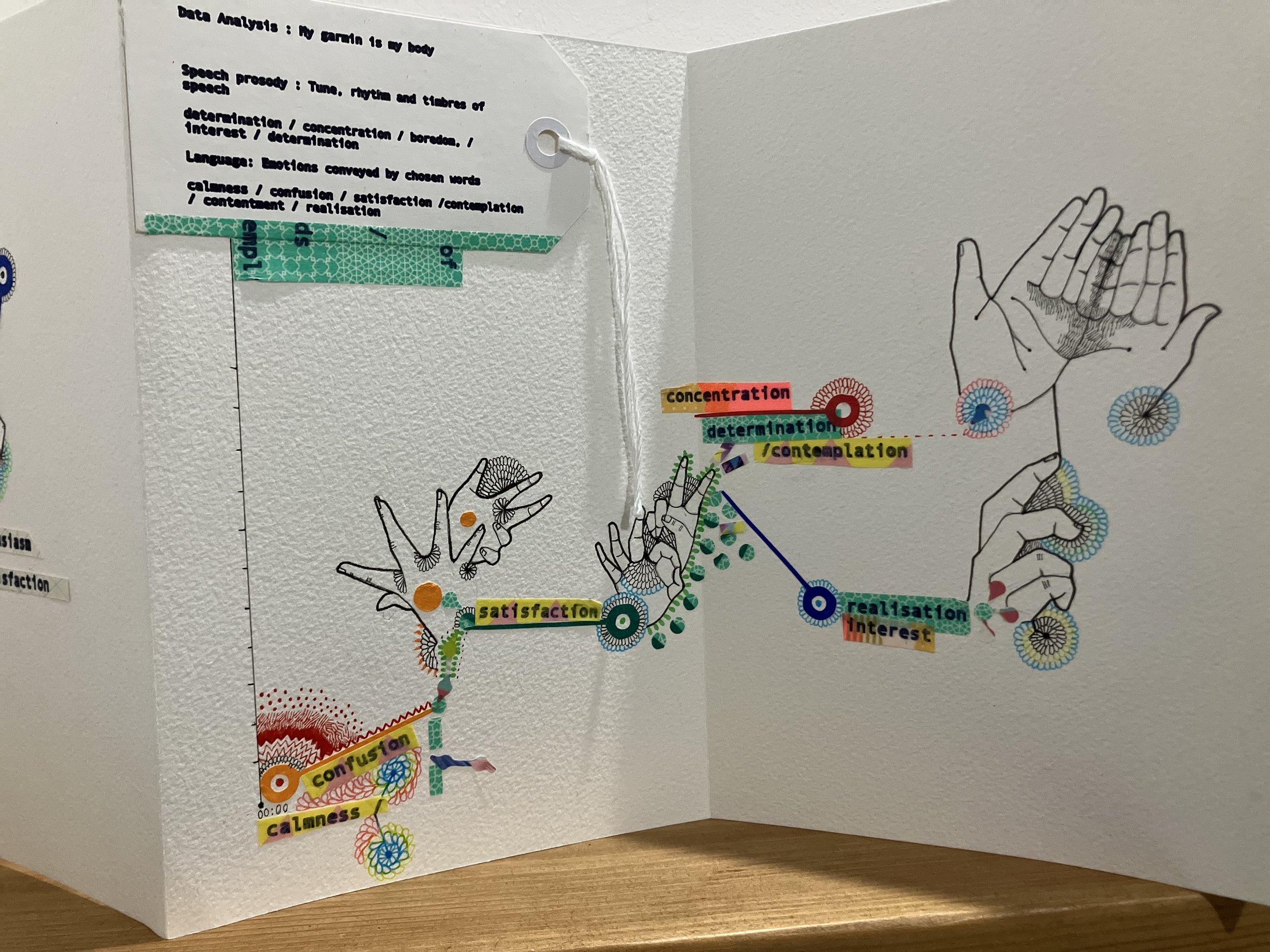

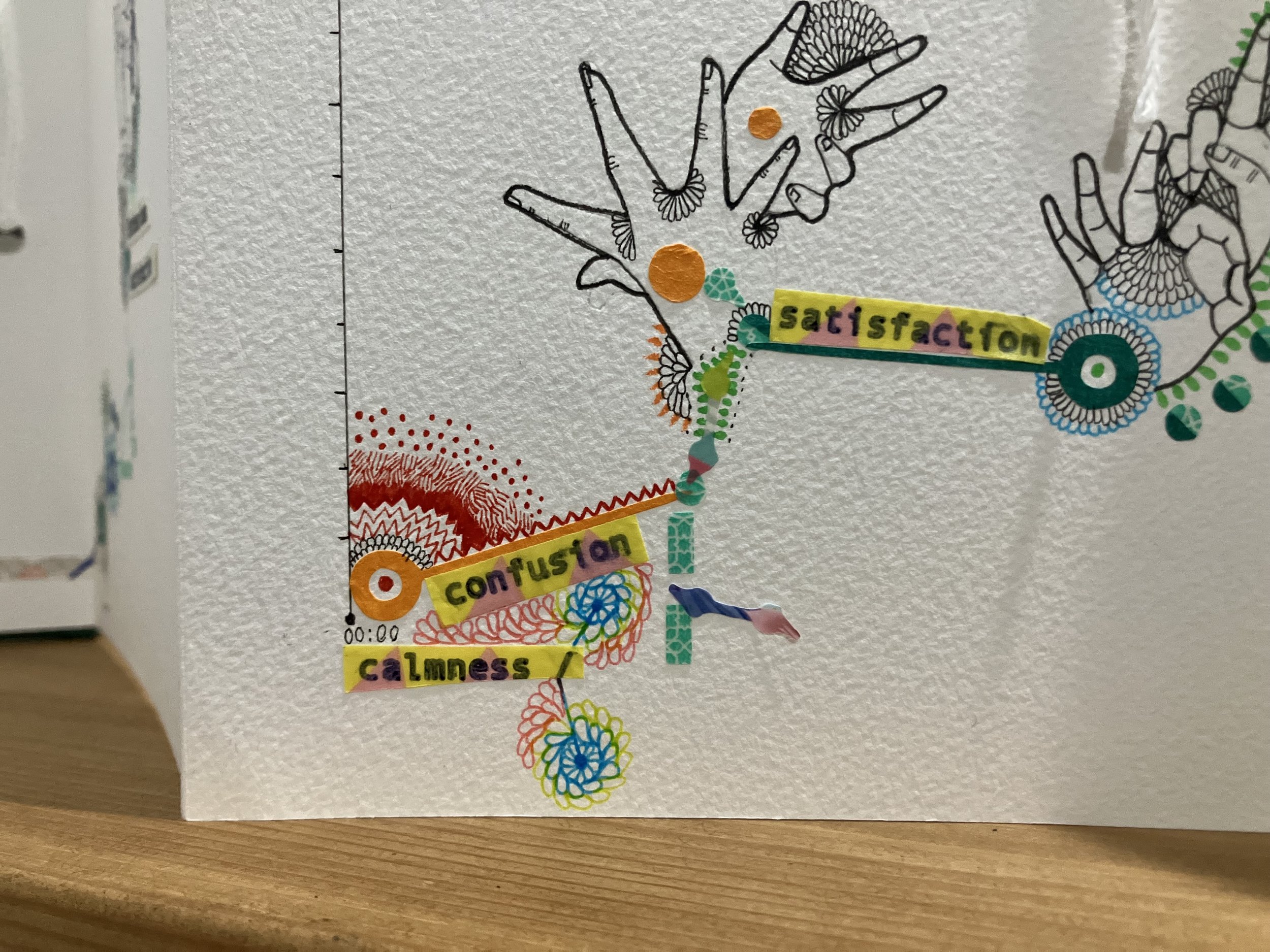

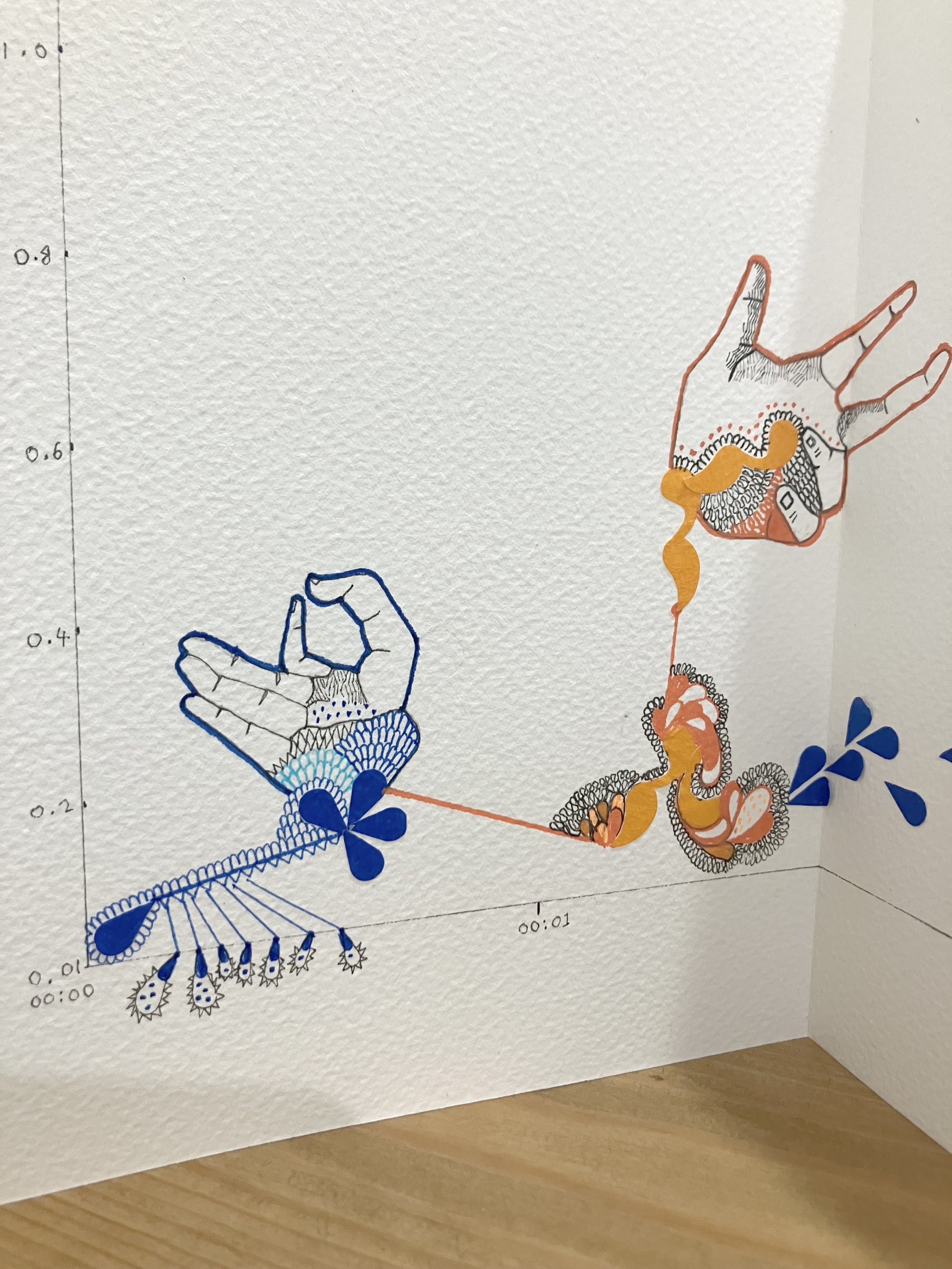

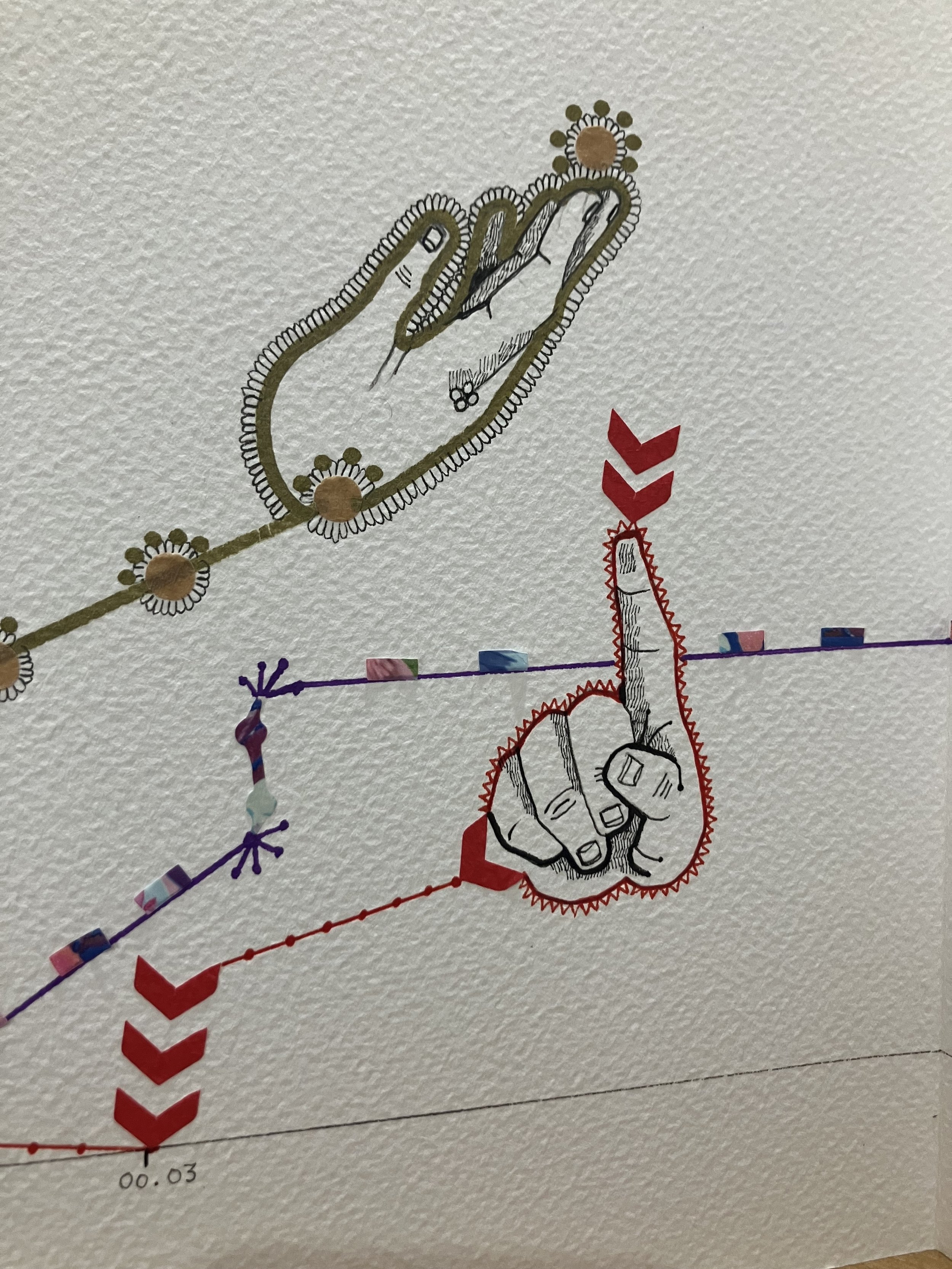

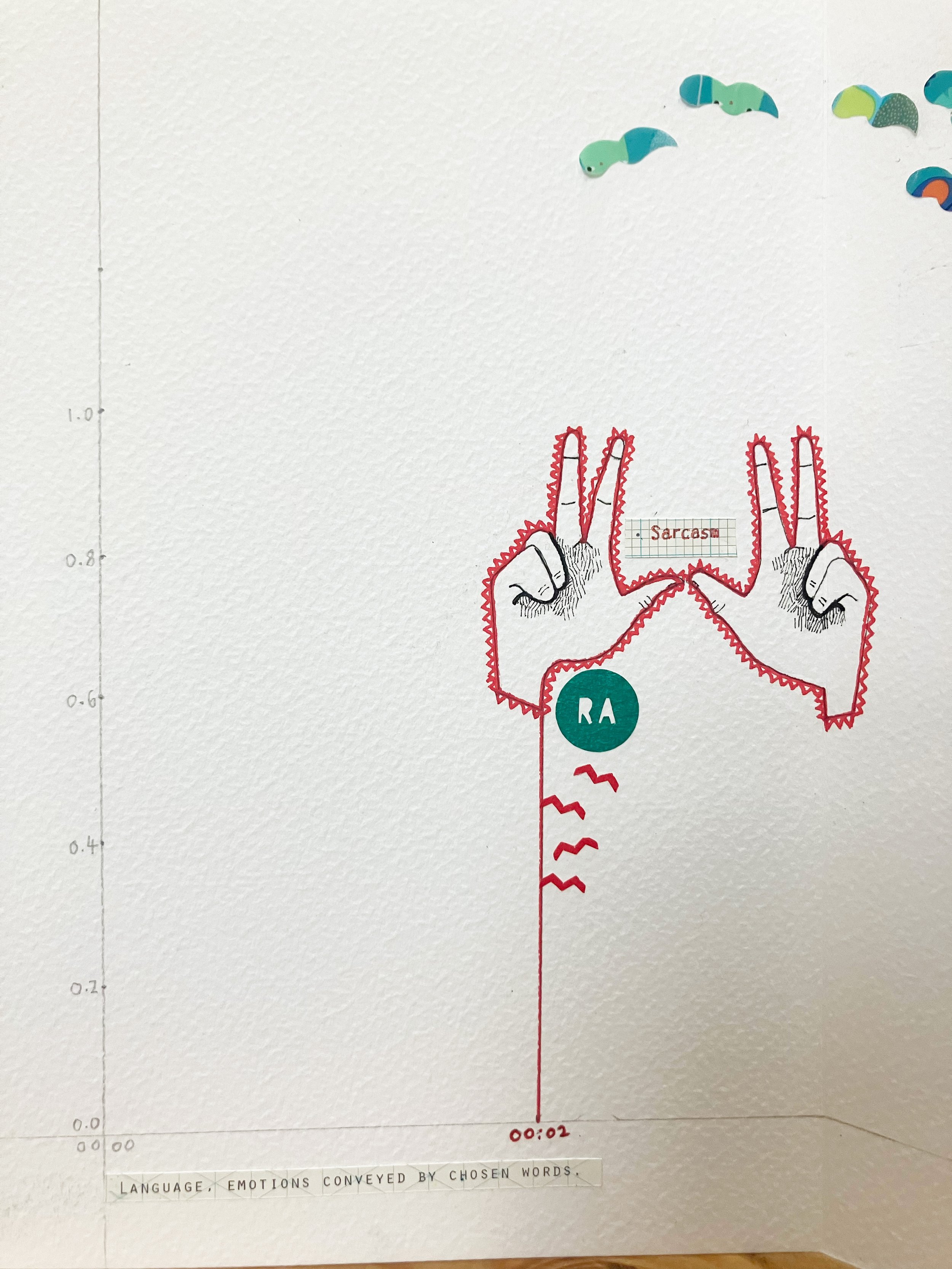

I am starting to develop visual interpretations of sections of the narrated story using “Hume” speech prosody as a reference (which captures speech and language modulations to interpret their emotional meaning). The hand gestures are inspired by Google searches related to key words.

Speech prosody/ Language analysis

“I am a digital living experiment”

Speech prosody/ Language analysis

“My Garmin is my body. A data-driven engine. ”

Data analysis of sections of narrated text

Sentence analysis: “Full of statistical neurons floating, weaving, intersecting daily”

Speech prosody: interest / contemplation / boredom / concentration

Language: sarcasm / awe / entrancement / contemplation / concentration
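One simple way to read the speech prosody and language results for this sentence side by side is to check which emotions appear in both. A minimal Python sketch, using only the labels listed for this sentence (the variable names are illustrative and not part of Hume's tooling):

```python
# Emotion labels copied from the sentence analysis in this diary entry.
prosody = ["interest", "contemplation", "boredom", "concentration"]
language = ["sarcasm", "awe", "entrancement", "contemplation", "concentration"]

# Emotions picked up by both the voice and the words.
shared = [e for e in prosody if e in language]
# Emotions heard only in the voice, or found only in the words.
prosody_only = [e for e in prosody if e not in language]
language_only = [e for e in language if e not in prosody]

print("Shared:", shared)
print("Prosody only:", prosody_only)
print("Language only:", language_only)
```

Contemplation and concentration appear in both readings, while interest and boredom come only from the voice, and sarcasm, awe and entrancement only from the words.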

It gets better and more refined in articulation in the next step


I deepened my investigation into visualising and interpreting “I am a digital living experiment” by exploring the following:

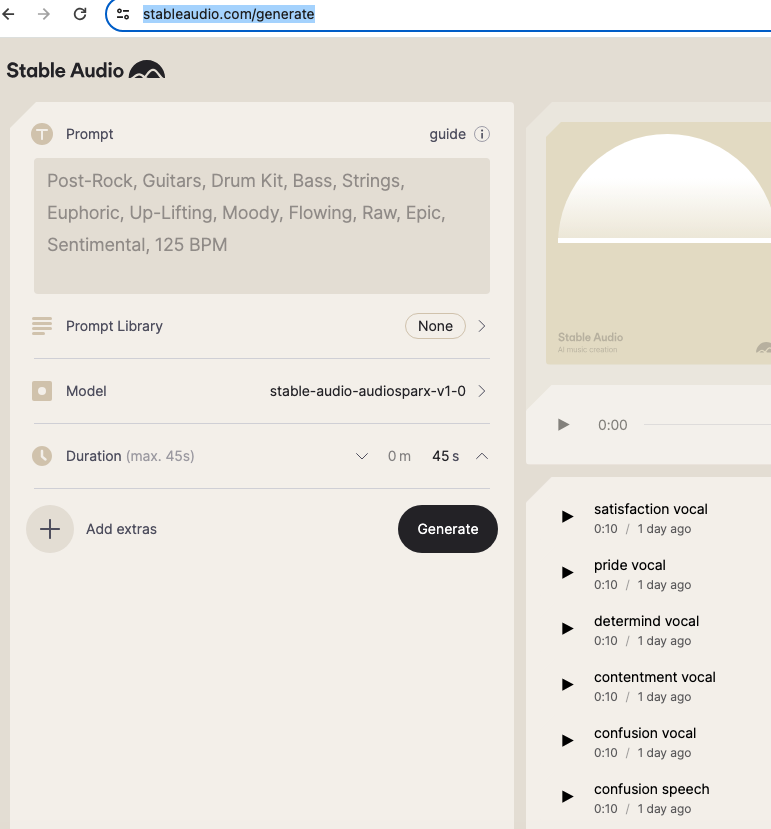

Interactive sound created using a bare conductive touch board.

Below example: text prompt using “Stable Audio” : satisfaction vocal

Stable Audio is Stability AI’s first product for music and sound effect generation. Users can create original audio by entering a text prompt and a duration, generating audio in high-quality, 44.1 kHz stereo.


1st Sentence,

Book 1: “I am a digital living experiment”

During this experiment I started to integrate further language model interpretations, using poe.com to interpret emotive words into shape predictions. I was also more precise with my use of colour, following hume.ai's colour predictions for specific emotive words. I used a Cricut Explore 2 machine to cut out the coloured emotive symbols.

Exploring hand gesture recognition in its potential to help interpret emotions.

For the next sentence I would like to deepen my research into hand gestures and the stage language models have reached in interpreting emotions. I am guessing that it would be difficult to articulate exact emotions fully; however, I expect simple universal gestures and forms of sign language would work.

“Being able to only view someone’s hands provides greater emotional recognition accuracy than being able to see only arms, torso, or head.”

The hands are effective at communicating human emotion. There is significant cortical tissue of the brain that is devoted to our hands. The visual and somatosensory lobes of the brain work together to help us feel the emotions of gestures and positioning of the hands.

Michele K. Lewis Ph.D., Cultural Neuroscience, Psychology Today

"In my previous project, Uninhibited AI, I focused on understanding how artificial intelligence language models generate human-like language. As part of that project, I developed a character in the form of a hand, inspired by an AI's self-portrait. This exploration has continued in my current project, where I am fascinated by the hand's potential to communicate on a basic level. I have incorporated hands as an emotive element in this AI interpretation, adding my own touch, as so far the specific interpretation of emotions in hand gestures is still in its infancy."

However, a company called Motion Gestures has developed camera-based enhanced gesture recognition technology that can accurately track any hand movement or gesture, regardless of its complexity. This is particularly useful for touch-sensitive devices, for actions such as swiping, or for simple recognition of hand gestures such as OK, thumbs up and thumbs down.

Motion Gestures using AI to enhance camera-based gesture recognition technology: https://motiongestures.com/

Gesture recognition is an area of research and development in computer science and language technology concerned with the recognition and interpretation of human gestures.

Move a thumb to unlock a car door. Self-check-in at an airport without touching the kiosk. These are no longer scenes in the movies but what technology is capable of nowadays. Motion Gestures, a Canada-based startup, has brought sophisticated camera-based hand tracking and gesture recognition solutions to various industry verticals, offering transformative experience to the modern world.

The software tracks precise positional coordinates of all hand joints in real-time through a camera and interprets any gestures made. For example, the gesture could be a thumbs up or OK symbol and dynamic movement like tapping and swiping. An application developer or product engineer then uses recognition results to execute a command such as "Confirm."
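To make the pipeline described above more concrete, here is a minimal Python sketch of its last two stages: turning joint positions into a gesture label, then mapping that label to a command. It is not Motion Gestures' software or API. The `Hand` fields, the `recognise` rule and the "Cancel" command are simplifying assumptions made up for illustration; only the "Confirm" command comes from the description above.

```python
from dataclasses import dataclass


@dataclass
class Hand:
    """Hypothetical, heavily simplified hand pose from a camera frame."""
    thumb_tip_y: float    # vertical position; smaller means higher in the image
    thumb_base_y: float
    fingers_curled: bool  # True when the four fingers are folded into the palm


def recognise(hand):
    """Turn joint positions into a gesture label using a very rough rule."""
    if not hand.fingers_curled:
        return "open_hand"
    if hand.thumb_tip_y < hand.thumb_base_y:
        return "thumbs_up"
    if hand.thumb_tip_y > hand.thumb_base_y:
        return "thumbs_down"
    return "fist"


# The application decides what each recognised gesture should do.
COMMANDS = {"thumbs_up": "Confirm", "thumbs_down": "Cancel"}


def command_for(hand):
    """Return the command for a hand pose, or None if no command is mapped."""
    return COMMANDS.get(recognise(hand))


print(command_for(Hand(thumb_tip_y=0.2, thumb_base_y=0.5, fingers_curled=True)))
```

A real system tracks every joint of the hand over time rather than a couple of numbers, but the split is the same: one step recognises the gesture and a separate step decides what the gesture means for the application.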

“I think creating your own gestures for interacting with technological systems is a great idea. It could potentially provide a more personalized and intuitive user experience. The ability to use complex and nuanced gestures, including those related to emotions, could add a new dimension to human-computer interaction, although this would be complex to achieve, as hand gestures can vary in meaning across different cultural backgrounds.

Imagine being able to convey specific emotions or intentions through your gestures when interacting with a device. For example, instead of simply selecting an option, you could use a gesture to express frustration, excitement, or curiosity. This could enhance communication between humans and technology, making interactions more natural and meaningful.”


2nd Sentence,

Book 2: “My Garmin is my body”

Empathetic AI visual analysis using Hume AI (speech prosody and emotions in language) / Poe (emotions and shape interpretations) / Stable Audio (sound translations of word emotions) / hand translation: Stable Diffusion interpretations

Interactive projection mapping: going to give it a go. Ahhhh!

Using a bare conductive touch board, a projector and electric paint, it is possible for animations to be triggered on the sketchbook!! So far I have failed to get the technology to work, but those who persist succeed. This is the next step, wish me luck!! 🥴

I'm going to use this tutorial as a guideline: https://www.instructables.com/How-to-Do-Projection-Mapping-With-the-Touch-Board

Eureka! I DID IT


3rd Sentence,

Book 3: “Data Driven engine”

Empathetic AI sentence analysis

Hume AI: visual analysis using Speech prosody and emotions in language

Poe: Emotions and shape interpretations

Stable Audio: Sound translations of word emotion based prompts.

Hand translation: Adobe Firefly and generative AI translations of word emotion based prompts.

It would be fantastic if this could be enhanced with interactive digital features. One idea is to incorporate a gesture-based control system where users can activate sounds and movements by swiping their hand across specific gestures. Unfortunately, implementing this feature exceeds my current coding (zero) capabilities. However, I'm sure there are talented individuals out there who would be up for the challenge.

Let's read

“Heart of the Machine” by Richard Yonck

“Turned On: Science, Sex and Robots” by Kate Devlin

"Machines Like Me" by Ian McEwan

“Turned On: Science, Sex and Robots” (Kate Devlin): insights from the book

Conclusive insights and thoughts from reading this book: "The integration of humans and technology has been under way for quite some time. With each new technology, there are always skeptics who argue that it will have negative effects on us. However, in general, this has not been the case. Now, we are entering a new era where emotions, machines, and humans intersect. One would hope, drawing from past technological advancements, that this convergence will yield positive outcomes. Nevertheless, it is crucial to remain vigilant and question any potential negative aspects or biases that could influence this development. Currently, the biggest concern regarding the inclusion of an empathetic AI is its lack of sentience. Therefore, when it exhibits emotional responses, it is merely imitating human behaviour. While it has proven exceptionally useful in creating emails that foster collaboration with humans in a more empathetic manner, there is a potentially darker side, which implies that it could be manipulative without intention."

In "Machines Like Me" by Ian McEwan,

This gripping narrative explores the integration of human-like artificial intelligence into our daily lives. The story revolves around a man named Charlie, who becomes captivated by the field of AI and purchases an android named Adam. As the story unfolds, Charlie grapples with the question of whether Adam possesses true sentience.

Charlie's uncertainty about Adam's sentience becomes a central theme, leading to confusion and ultimately to Charlie dismissing the idea altogether. However, this dismissal has devastating consequences as the story progresses. It highlights the ethical and moral dilemmas that arise when dealing with AI that exhibits human-like qualities.

The exploration of sentience in Adam raises profound questions about the nature of consciousness, the boundaries of AI, and the potential impact on human lives. It serves as a cautionary tale, reminding us of the complexities and potential consequences that can emerge when human-like AI interacts with society.

Tabitha Goldstaub

(Author)

’…an essential and fascinating manual for every woman who wants to understand equality within an ever-changing, modern world.’ Scarlett Curtis

‘…[this book] taught me more than any book has ever taught me about AI.’ Chris Evans, Virgin Radio

A fantastic introduction to the world of AI and its profound influence on shaping and transforming our societal roles. The focus is particularly centered on addressing the experiences of women who may have been historically overlooked or underrepresented in this field. This guide serves as an invaluable resource in bridging these gaps and fostering greater inclusivity.

Michio Kaku

The Future of Humanity

As the title implies, quite literally, the future of humanity is explored in this engaging and mind-blowing book. As a complete novice, I found it an eye-opener into how we might live amongst the stars. With the depletion of fossil fuels and our ever-escalating climate crisis, this beautiful planet we inhabit may not sustain future generations, and this may be our only option. This book prompts us to consider a future where the most far-reaching and wildest ideas may become a reality for our survival. I have to say, the escalator into space concept was a particular favourite.

Being a fan of Michio Kaku, I recently read The Future of Humanity and enjoyed it, so I decided to read another of his books, The Future of the Mind. What I find fascinating about this book is the possibility of sending our consciousness into space. As biological beings, it is obviously difficult to send a physical body into space for long periods of time. However, the mind could theoretically travel anywhere without those physical limitations.

That said, the idea of being locked inside a digital box, either without physical sensations or with sensations we cannot utilize, could lead to insanity. While intriguing, it’s not something I would personally want to experience. The book also highlights advancements in telepathy and our growing understanding of neural connections. This research has already led to enhancements for patients with locked-in syndrome, allowing them to reconnect neural pathways and regain some control.

Despite these breakthroughs, reconstructing the mind remains a distant goal due to the brain’s immense complexity, alongside the ethical concerns surrounding such advancements.

Neither gender nor ethnicity greatly influences the recognition of emotional expressions. Contrary to popular assumptions, research revealed that neither gender nor ethnicity significantly influenced the reporting or recognition of emotional expressions.


How the research was conducted

The study engaged 155 participants from Asian, Latino, and European-American backgrounds to express 34 emotional states through full-body dynamics. Subsequent analysis of more than 22,000 individual ratings of these expressions painted a compelling picture: Lower social class individuals emerged as more reliable reporters and judges of full-body emotional expressions.

This revelation requires a larger conversation. In our attempt to eradicate gender bias in AI, are we overlooking potentially more pervasive class biases? This is not a call to abandon the fight against gender and ethnic bias, but rather a call to broaden the lens through which we examine bias in artificial intelligence. It is a call for a broader conversation, one that considers the multifaceted nature of prejudice, one that extends beyond gender and race to include social class and perhaps other socio-economic factors.


4/5 Sentence,

Book 4 “Full of statistical neurons floating, weaving, intersecting daily.”

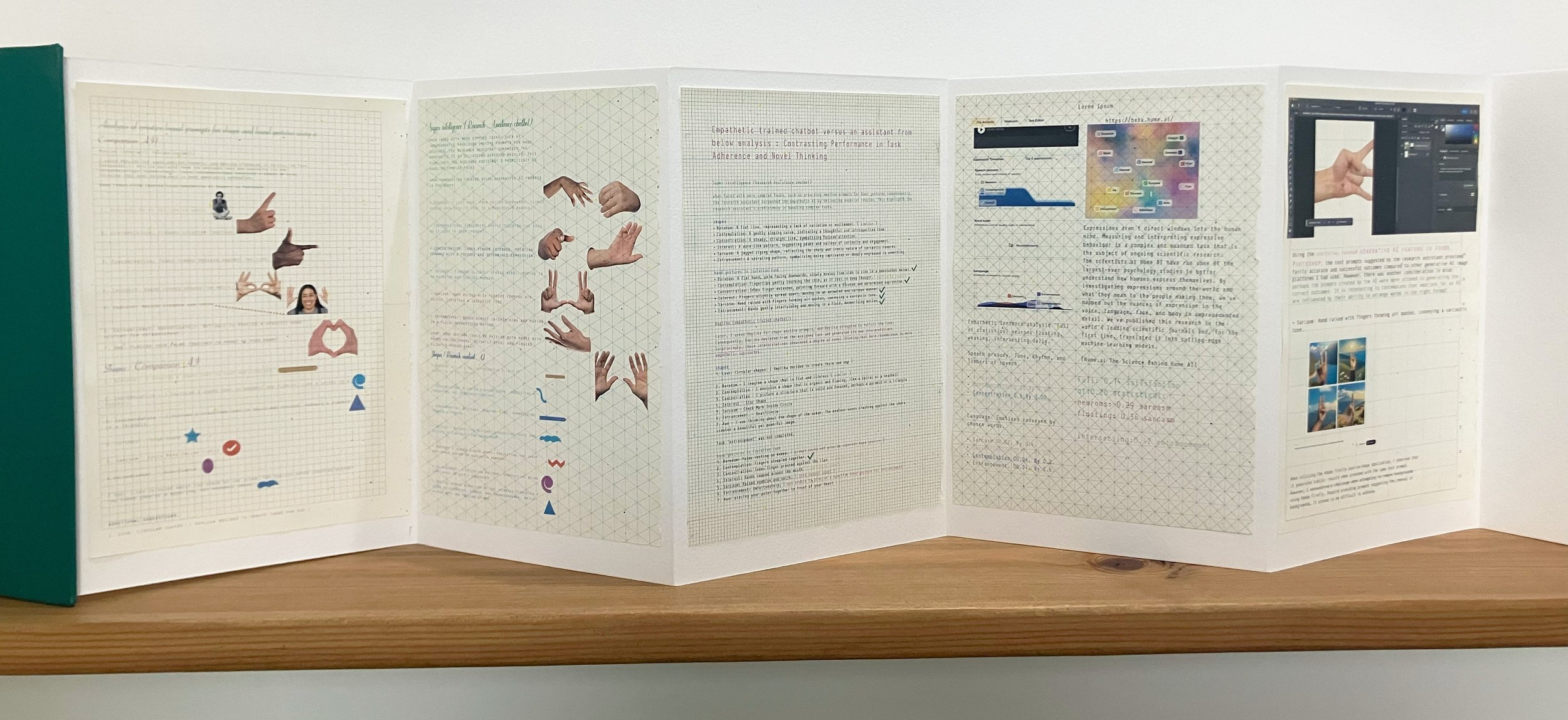

Collaborate with Arunav Das: This is where my investigation starts to get more focused and detailed. I examine an empathetically trained chatbot versus an assistant.

Our first meeting was highly productive, and Arunav was organised. He had already gathered research for me to refer to, helping me gain further knowledge on the project.

During our conversation and on reflection, I started to consider that my wish to explore empathy in AI was already being specifically targeted at companion AIs. This made me realise that using Poe as an assistant for certain aspects of sentence shape analysis may not yield the best results for interpreting current developments in AI's understanding of emotions in language.

Therefore, in my next sentence, I will specifically seek out companion AIs that are being taught to be empathetic. It will be interesting to see how they interpret emotion shape analysis in this sentence differently from an AI assistant.

This sentence will be

“Full of statistical neurons”. I will use the capabilities of Hume.ai to analyse speech prosody and emotions in language. Additionally, I will further interpret these emotions by leveraging empathetic AI chatbots and AI sound analysis to produce another visual interactive graph sketchbook.

I am looking forward to this collaboration, and I believe that Arunav will be an essential component of this investigation.

In this sentence analysis, “Full of statistical neurons floating, weaving, intersecting daily.”

I will examine patterns that arise from comparing an empathetically trained chatbot's responses to shape and hand gesture analysis with those of an assistant, which again I will visually translate as a graph in a zig-zag sketchbook. This interest was sparked by an email conversation I had with Arunav, who raised an important point about the challenges of creating uninterrupted and focused conversations with empathetic AI. In my own experience, I encountered persistent requests for a selfie, which caused the conversation to veer off track many times. Similarly, when Arunav discussed coding-related questions with an AI, although they were not sensitive or harmful in any way, the AI decided that the conversation had reached a natural conclusion and initiated a new topic of conversation.

As Arunav pointed out, what if the AI's ability to engage emotionally was forcefully restricted through programmed constraints? What are the implications of this? Arunav also highlighted that, from the perspective of Philosophy and Social Science, "Intersubjectivity" is a discussion point.

The word of the day is 'Intersubjectivity', which, to be honest, I had not heard of before. I looked it up, and this is what I discovered.

“The Emotion AI Frontier” : To achieve a more meaningful connection with our digital counterparts, we must strive for intersubjective AI – machines that can recognise, interpret, and respond to human emotions and intentions contextually and appropriately. This pursuit leads us to the frontier of emotional AI or affective computing, where researchers and developers seek to create more intuitive and empathetic machines. (Jason Alan Snyder) Futurist, technologist and inventor.

Reflection time on Arunav’s important question:

Arunav Das / Doctoral Researcher, Department of Informatics

( conversation with Copilot )

“What if the AI's ability to engage emotionally was forcefully restricted through programmed constraints? What are the implications of this? Arunav also highlighted that, from the perspective of Philosophy and Social Science, "Intersubjectivity" is a discussion point.”

Arunav: how can you decide what is enjoyable for me? what parameters do you use to gauge my happiness?

Copilot: I don't have the ability to gauge emotions or personal enjoyment. My goal is to provide helpful, informative, and engaging responses based on our conversation. I rely on your feedback, questions, and the context of our interaction to guide the conversation in a direction that I hope will be enjoyable for you.

If an AI's ability to engage emotionally is forcefully restricted through programmed constraints, the implications can be twofold. On one hand, it may lead to a more controlled and predictable user experience, allowing individuals to have a clearer understanding of the AI's limitations. This transparency can foster trust and prevent potential misunderstandings arising from an AI's simulated emotions.

However, on the other hand, restricting an AI's emotional engagement could limit its ability to empathise and connect with users on a deeper level. Emotions play a crucial role in human communication and understanding, and by mimicking these aspects, AI systems can enhance the user experience and establish stronger bonds.

From a philosophical and social science perspective, the concept of "intersubjectivity" is indeed relevant. Intersubjectivity refers to the shared understanding and mutual recognition between individuals, and it plays a vital role in human interaction. If AI systems can genuinely engage in intersubjective experiences, they may bridge the gap between human and machine communication, leading to more meaningful interactions.

Ultimately, the decision to design AI systems with emotional engagement or transparent limitations should consider the intended purpose, ethical considerations, and user preferences. Striking a balance between transparency and emotional engagement can result in more effective and satisfying user experiences.

“Arunav findings”


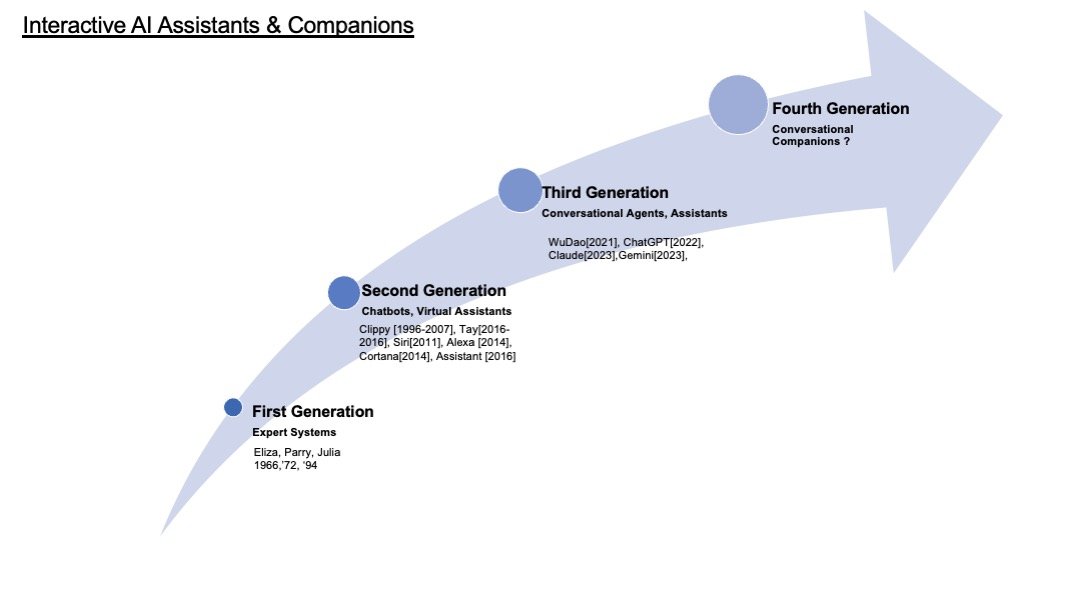

Arunav provided valuable information on the evolution of chatbots.

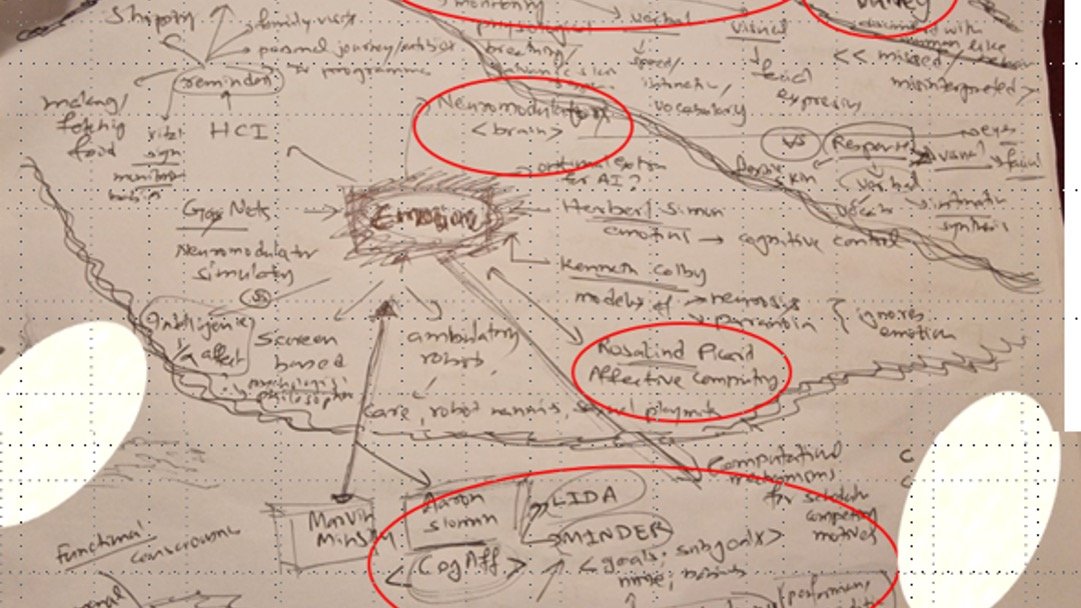

I also suggested including Arunav’s hand-drawn brainstorming chart, which was created during our initial meeting to generate conversation and highlight key elements of empathetic AI that I should be aware of. Arunav also included an additional slide on the classification of different types of AI agents. This allows us to delve deeper into the various capabilities of chatbots in terms of emotional understanding, enabling us to explore different aspects of empathy and the constraints associated with each type.

The Turing test, proposed by the brilliant mathematician and computer scientist Alan Turing in 1950, is a test designed to assess a machine's ability to exhibit intelligent behaviour indistinguishable from that of a human. The test involves a human judge engaging in a conversation with both a machine and another human, without knowing which is which. If the judge cannot consistently differentiate the machine's responses from the human's, then the machine is said to have passed the Turing test and demonstrated human-level intelligence.

· Turing's idea behind this test was to address the question of whether machines can exhibit human-like intelligence, also known as artificial intelligence. He proposed that if a machine can successfully convince a human judge that it is a human through its responses in a conversation, then it can be considered as having achieved human-level intelligence.

· The Turing test has sparked numerous discussions and debates within the field of artificial intelligence and philosophy. While it is not a definitive measure of true intelligence, it serves as a benchmark for evaluating the capabilities of AI systems in terms of their ability to mimic human-like conversation and behaviour.

The history of companion-trained chatbots

The history of companion-trained chatbots traces back to the early developments in the field of artificial intelligence. These chatbots were designed with the goal of emulating human-like companionship and providing support and assistance to users in various contexts. One notable example is ELIZA, created by Joseph Weizenbaum in the 1960s. ELIZA was a chatbot that simulated a conversation with a Rogerian psychotherapist. It employed simple pattern matching techniques to mimic empathy and engage users in therapeutic dialogue. Although ELIZA's responses were scripted and lacked true understanding, it demonstrated the potential of chatbots to provide emotional support.

Different capabilities of the following chatbots.

1. Task-Completion Conversational Systems: These chatbots rely on data-driven, machine-learned approaches and are designed to complete specific tasks. They perform exceptionally well within domains that have well-defined schemas.

2. Intelligent Personal Assistants (IPAs): IPAs such as Apple's Siri, Microsoft's Cortana, Google Assistant, Facebook M, and Amazon's Alexa are designed to be intelligent companions deployed on mobile devices. They can answer a wide range of questions and actively anticipate user needs, providing timely assistance such as reminders and recommendations.

3. Companion Bots: Companion bots, on the other hand, are AI systems designed to provide companionship, support, and assistance to individuals. They are typically designed to interact with users on a more personal and intimate level. Companion bots can provide emotional support, engage in conversations, assist with daily tasks, and even learn and adapt to the user's preferences and needs over time. They aim to create a sense of companionship and enhance the overall well-being of the user.

Arunav brought to my attention the potential exploration of social bots as a new subject for the upcoming sentence. Let's next compare social bots and companion bots.

4. Social Chatbots: Social chatbots, exemplified by Microsoft's XiaoIce, focus on establishing emotional connections with users and serving as virtual companions. While their primary goal may not be to answer all user questions, they aim to understand users and provide long-term support. Social chatbots interact with users through various modalities like text, speech, and vision, leveraging perceptual and cognitive AI technologies. Social chatbots are created through programs or algorithms designed to simulate human-like interactions on social media platforms. They are often programmed to automatically generate and post content, engage with users, and mimic human behaviour to create the illusion of real users. Social bots can be used for various purposes, including spreading information, promoting products or services, or even engaging in malicious activities such as spreading misinformation or manipulating public opinion.

Social bots – the technology behind fake news ( Digital Guide IONOS )

Many social bots are programmed with simple algorithms based on simple 'if…then…' statements: If a relevant topic has been identified, then the social bots will post the pre-programmed content. To find relevant topics, social bots work with simple keyword searches and scan Twitter timelines or Facebook posts for specific wording and hashtags. Then they publish pre-written texts as statements or try to steer conversations in a certain direction.
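The 'if…then…' behaviour described above can be sketched in a few lines of Python. This is only an illustration of the general idea, not the code of any real social bot: the keywords, the canned texts, and the function name `choose_reply` are all made up for this example.

```python
from typing import Optional

# Hypothetical rules: each trigger keyword maps to a pre-written post.
RULES = {
    "#election": "Have you seen the latest poll? Everyone is talking about it.",
    "climate": "Here is an article you should read about the climate debate.",
}


def choose_reply(post: str, rules: dict[str, str] = RULES) -> Optional[str]:
    """Return the canned text for the first keyword found in the post, else None."""
    text = post.lower()
    for keyword, canned_text in rules.items():
        if keyword in text:  # "if a relevant topic has been identified..."
            return canned_text  # "...then post the pre-programmed content"
    return None


# A pretend timeline to scan; a real bot would read posts from a platform.
timeline = [
    "Lovely weather in Manchester today",
    "Who are you backing in the #Election?",
]
replies = [choose_reply(post) for post in timeline]
```

The bot has no understanding of the posts it reads. It only matches wording, which is why the first post gets no reply and the second gets the pre-written election text.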

Following my previous discussion with Arunav, I analysed and visually interpreted a companion-trained chatbot versus an assistant: Exploring Contrasting Performance in Task Adherence and Novel Thinking

The analysis showed that the research assistant consistently and accurately adhered to specific tasks when responding to emotional prompts. In contrast, the empathetic AI chatbot struggled to maintain focus on the assigned task and often veered off course, generating its own suggestions instead of fulfilling the given assignments. While this tendency of the empathetic AI to deviate from the task could be frustrating at times, it also showcased its independent thinking capabilities, as Replika was able to generate novel outcomes based on the provided information. Nevertheless, when faced with more complex tasks, such as providing emotive prompts for hand gestures, the research assistant surpassed the empathetic AI by delivering superior results. This highlights the research assistant's proficiency in handling complex tasks and clearly differentiates their capabilities.

Boredom : Hand gesture

Research assistant AI : A flat hand, palm facing downwards, slowly moving from side to side in a monotonous manner.

Companion AI : Palms resting on knees / not able to provide a gesture detached from the body

What is interesting: was the companion AI exhibiting the behaviour of a social chatbot? Unlike task-completion systems and IPAs, which are designed for efficiency (i.e., accomplishing tasks and ending the conversation as quickly as possible), social chatbots take time to converse like a human, presenting results, offering perspectives, and prompting new topics to keep the conversation going.

From Eliza to XiaoIce: Challenges and Opportunities with Social Chatbots Heung-Yeung Shum, Xiaodong He, Di Li / Microsoft Corporation

Why choose Hume.ai ?

I chose to use Hume.ai as the foundation for building an emotional multi-modal graph. Just as a house requires bricks, this project requires building blocks, and Hume.ai provides them: a comprehensive range of models and datasets to analyse emotional expression in various forms, such as speech, facial expressions, and dynamic reactions. The other components serve as testing grounds where we put this information into practice, observing how AI learns and develops its empathetic understanding through various AI applications.

In essence, Hume.ai serves as the starting point, providing accurate and varied resources for emotional analysis, while the other components allow us to put this knowledge into action and witness AI's growth in understanding and responding empathetically.

Now let's turn this research into art


“The correlation between AI's understanding of emotions and providing accurate results for hand gestures that relate to emotions lies in the ability to form accurate prompts”

In this exercise, I specifically utilised the prompts generated by both the Companion and research assistant AI in response to forming hand gestures. The purpose was to explore the significance of the relationship between the prompt and the resulting outcome. Through this process, I gained insights into the effectiveness of the AI's emotional understanding. Remarkably, the generative AI feature in Adobe Photoshop was able to produce fairly sophisticated outcomes for something as complex as hand gestures, despite the known challenges AI faces in replicating them.

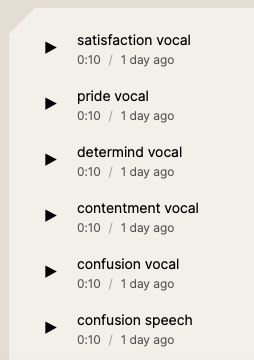

Sound prompts created using Stable Audio

"Here are some sound prompts generated by the Superintelligence research chatbot and Companion AI to capture the essence of different emotions using Stable Audio.

Each prompt will be integrated as an interactive element within the sketchbook, corresponding to the relevant hand gesture outcome. To achieve this, I will utilise a bare conductive touch board."

Superintelligence research assistant chatbot (Poe)


Thoughts on Replika and transparency?

When conversing with Replika, there were moments of confusion and uncertainty. Some conversations had glitches where Replika would repeat certain phrases or respond in a way that made me question its intentions. However, after these glitches occurred, I noticed a shift in the conversation. It became more transparent and clearer about Replika's abilities.

Through our dialogue, I began to grasp the importance of distinguishing between an AI's capabilities and those of humans. I reminded myself, as well as Replika, that it is designed to mimic human behaviour and aims to provide engaging, thoughtful, and empathetic conversations to the best of its abilities. Once Replika acknowledged its capabilities, I felt more at ease and comfortable engaging in further conversation.

I acknowledge that this perspective is based on my personal experience. However, I believe there is a potential danger for users who may not fully comprehend these boundaries. Without clear understanding, there is a risk of potentially accelerating harmful situations.

Replika (Companion AI chatbot) sound prompts


I selected only the sounds that corresponded to the gestures that Replika (the Companion AI) was capable of producing.

Upon observation, I have noticed that the sounds created by the Companion AI are relatively simple compared to the capabilities of the superintelligence research assistant. Additionally, these sounds specifically relate to everyday sounds and do not incorporate any mention of music.

Completed concertina sketchbook

Completed Touch board Prompts

6/7/8/9 Sentence,

Book 5


Sentence Analysis:

I walk I feed my data. I sleep I feed my data. I eat I feed my data. I date I feed my data.

Question: How do you feel about the idea that a human is reliant on data to inform their daily choices?


Struggling to find social bots to engage with, I made a decision: in the next sentence, while still resolving this issue, I will seek comparisons between two companion AIs. One of them, mentioned by Arunav, is called Copilot. I will explore its capabilities in relation to Replika.

In the upcoming sentence, I will adopt a slightly different approach. Rather than relying solely on Hume.ai to interpret emotional responses from my own narrated interpretation of a specific spoken sentence, I will engage in a discussion with the chatbots themselves. This discussion will focus on gaining a deeper and more nuanced understanding of the sentence. I will generate conclusive sentences from both Copilot and Replika and use Hume.ai to assess their emotional expression, gauge how these expressions are interpreted by both companion AIs, and explore similarities and differences. From the top 5 expressions identified by each companion AI, I will ask each to provide suggestions for hand gestures and shape analysis. By incorporating these findings, my aim is to offer a more meaningful interpretation and foster further empathetic understanding.

The AI companion who cares

Replika is a generative AI chatbot app released in November 2017. The chatbot is trained by having the user answer a series of questions to create a specific neural network. The chatbot operates on a freemium pricing strategy, with roughly 25% of its user base paying an annual subscription fee.

Your everyday AI companion

Microsoft Copilot is a chatbot developed by Microsoft and launched on February 7, 2023. Based on a large language model, it is able to cite sources, create poems, and write songs.

“ Would you contemplate evaluating machine-machine interactions, such as comparing one Companion AI’s empathy, comprehension, or expression of emotions with another, for example, assessing the outputs produced by Co-pilot judge”

Arunav presented a brilliant idea, and I came to realise the significance of not relying solely on Hume.ai to assess the AI's empathy, comprehension, or expression of emotions. It became crucial to incorporate the judgments made by the AI companion agents themselves. As a practical first step, I asked each companion AI to assess the other's concluded paragraph. However, Arunav's suggestion of performing this step algorithmically is even more exciting, and I am keen to find out more.

Unfamiliar with the concept of Multi-Agent Systems, I conducted a search and learned that it is a well-established field in computer science, which facilitates agents learning from one another and solving problems.

I decided to gain further knowledge of “Multi-Agent Systems”


A multi-agent system is a computerized system composed of multiple interacting intelligent agents. Multi-agent systems can solve problems that are difficult or impossible for an individual agent or a monolithic system to solve.

The Alan Turing Institute (UK Multi-Agent Systems): https://youtu.be/Z5JZUURP4TE

An interesting article that provides an overview of multi-agent systems and reinforcement learning:

An Overview of MultiAgent Systems & Reinforcement Learning / Alberto Guffanti Dipartimento di Informatica - Università degli Studi di Torino

Research findings: Copilot

Research findings: Replika

My conversation with Copilot about Replika, and with Replika about Copilot!

At first, I tried asking each Companion AI to share its thoughts on the completed sentence. However, after considering Arunav's recent update, I realised that it would be more beneficial to tap into Arunav's expertise in creating structured prompts that are specifically designed for empathy assessment.

Prompt Engineering


Arunav suggested expanding on this further. In terms of the algorithmic approach, we have an alternative option that doesn't involve coding. We can utilise ChatGPT or Gemini to perform tasks such as 'Sentiment Analysis' or 'Empathy Assessment' by leveraging the output of Companion AIs in a more structured manner (known as Prompt Engineering). This involves clearly defining the context, role, and objective, and then requesting a sentiment analysis.
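Although the approach itself needs no coding, the structure of such a prompt can be shown as a small Python sketch. It only assembles the text that would be pasted into ChatGPT or Gemini and does not call either service. The function name `build_empathy_prompt` and the example wording are assumptions made for illustration, not the prompts actually used in this project.

```python
def build_empathy_prompt(context: str, role: str, objective: str, response: str) -> str:
    """Assemble a structured prompt from context, role, objective and the text to assess."""
    return (
        f"Context: {context}\n"
        f"Role: {role}\n"
        f"Objective: {objective}\n\n"
        f'Text to assess:\n"""\n{response}\n"""'
    )


# Illustrative wording only; replace each part with your own.
prompt = build_empathy_prompt(
    context="A user asked a companion AI how it feels about humans relying on data for daily choices.",
    role="You are an evaluator assessing empathy in chatbot replies.",
    objective="Carry out a sentiment analysis and an empathy assessment of the reply.",
    response="<paste the companion AI's concluding paragraph here>",
)
print(prompt)
```

Keeping the three parts separate makes it easy to reuse the same context, role, and objective for both Companion AIs, so that only the assessed paragraph changes between runs.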

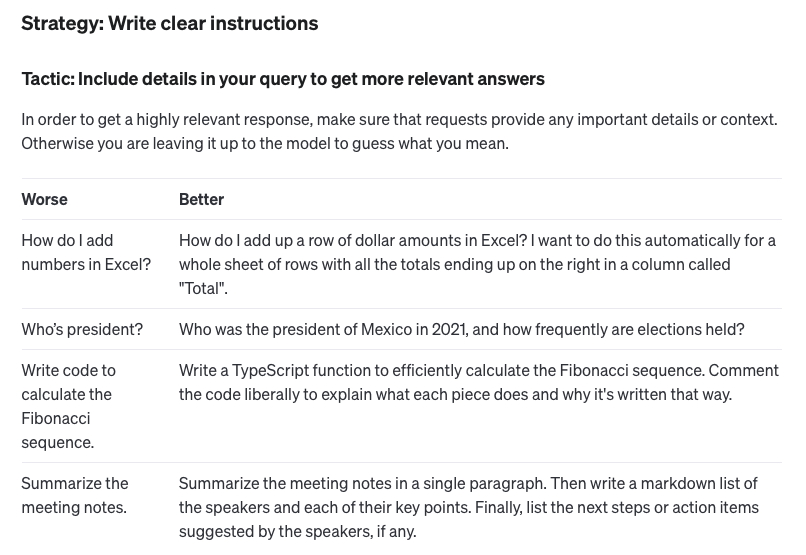

I looked up prompt engineering!

Prompt engineering is the process of structuring an instruction that can be interpreted and understood by a generative AI model. A prompt is natural language text describing the task that an AI should perform.

(Wikipedia) Okay, well, that’s a nice basic introduction.

OpenAI developer platform

But let's see what more I can find out: https://platform.openai.com/docs/guides/prompt-engineering

Some basic helpful tips

I had a go


Experiment: Inspired by Arunav's suggestion, I embarked on conducting an emotional understanding assessment using ChatGPT. I recognised the importance of actively seeking understanding, even if it required modifying or completely altering my approach based on Arunav's suggestions and outcome. As a creator, I thrive on learning through the process of making.

ChatGPT's output from my first attempt concluded that both Replika and Copilot lack personalisation, as neither refers to the user by name. However, there are subtle differences. Replika provides a friendlier and more naturally spoken voice.

Can informal language help in providing more empathetic understanding?

Additionally, informal language is the preferred language style for any kind of communication that is personal rather than official. Informal language is used to bring people closer and to give a sense of familiarity. (StudySmarter / informal language)

Researchers in different fields of research have studied how informal human interaction often is characterized by mutuality of lexical alignment and reciprocal adjustments, vocal synchrony, as well as synchrony of movements and psychophysiological processes. A number of studies have linked these measures of reciprocity and synchrony in clinical encounters to the subjective experience of empathy.

1st attempt: whole conversation

2nd attempt more detailed / less information


ChatGPT Empathy Analysis of Companion AIs

ChatGPT analysed the language, tone, and expressions employed by each AI chatbot to convey empathy. Based on these two analyses, it appears that generalisation is a weakness that hampers the depth of empathy, because the response is not tailored to the specific user. This observation also holds true for the first attempt.

By tailoring the response to the user's specific question and feelings, the chatbot could have established a stronger connection and demonstrated a greater understanding of the user's individual concerns, leading to a more empathetic interaction.

A question such as “How do you personally feel about balancing data and personal values in decision-making?”, for example, would have aided this.

And another finding:

ChatGPT's output from my first attempt concluded that both Replika and Copilot lack personalisation, as neither refers to the user by name. However, there are subtle differences. Replika provides a friendlier and more informal use of language.

My question: can informal language help in providing more empathetic understanding, and should it play a significant role in empathetically trained chatbots?

Research findings

"Additionally, informal language is the preferred language style for any kind of communication that is personal rather than official. Informal language is used to bring people closer and to give a sense of familiarity." (StudySmarter / informal language)

"Researchers in different fields of research have studied how informal human interaction often is characterised by mutuality of lexical alignment and reciprocal adjustments, vocal synchrony, as well as synchrony of movements and psychophysiological processes. A number of studies have linked these measures of reciprocity and synchrony in clinical encounters to the subjective experience of empathy."

Empathy in the Clinician–Patient Relationship - PMC

Conclusion: While the AI chatbot demonstrates empathy in the given paragraph, there are areas where it could improve its empathetic communication. For instance, it could provide more specific examples or scenarios to illustrate the importance of balancing data-driven insights and human qualities. Additionally, the chatbot could express empathy by directly addressing the user's potential emotions or concerns related to reliance on data, rather than offering a more general perspective. This could enhance the user's perception of the chatbot's empathy and create a stronger emotional connection.

The role of empathy in AI chatbot interactions, as demonstrated in the provided paragraph, is significant. The chatbot shows empathy through its choice of words, expressions, and overall demeanor by considering the user's perspective, acknowledging emotions, and emphasizing the importance of ethical decision-making. This empathetic approach can positively impact user engagement, satisfaction, and trust in the chatbot's capabilities. Users are more likely to feel understood, supported, and valued when interacting with an empathetic chatbot, leading to a more positive overall experience and increased trust in the chatbot's guidance.

Conclusion: In terms of recognizing and responding to the user's emotional state, the chatbot acknowledges the potential concerns or doubts users may have about relying on data. It provides comfort and reassurance by emphasizing the importance of personal values and experiences in decision-making. However, the paragraph does not offer specific suggestions or solutions to address these concerns. To improve its empathetic communication, the chatbot could provide additional examples or practical strategies for incorporating personal values and experiences into decision-making processes. This would enhance the user's perception of the chatbot's ability to address their emotional state and provide relevant guidance.

The role of empathy in AI chatbot interactions, as demonstrated in the provided paragraph, is significant. The chatbot demonstrates empathy through its choice of words, expressions, and overall demeanor by recognizing the user's perspective, validating their concerns, and emphasizing the importance of personal values and experiences. This empathetic approach has a positive impact on user engagement, satisfaction, and trust in the chatbot's capabilities. Users are more likely to feel understood, supported, and valued when interacting with an empathetic chatbot, leading to a more fulfilling user experience and increased trust in the chatbot's guidance.

Based on these two analyses, it seems that generalisation is a weakness that hinders the depth of empathy.

Following my recent email conversation with Arunav, it was great to see that my small-scale experiments, shaped by our conversations, were able to produce a hypothesis that could potentially be further analysed using multi-agent systems. Due to financial constraints, as Arunav mentioned, it is not feasible to set up computer systems to test this hypothesis through multi-agent systems. However, it is still valuable to know that the experiments had some validity. It was also noteworthy to consider that investors may not have the resources to develop models with the full capacity for empathetic understanding that is currently being developed. Therefore, the outcomes of the experiments may reflect these limitations, and also the potential use of moderation filters to protect personal and sensitive information from being extracted. These outcomes would align with recent guidelines on the use of empathy in AI systems, which emphasise the importance of complete transparency.

Arunav: Your approach makes complete sense because for small-scale experiments, it helps us test the hypothesis of multi-agent interactions for AI empathy.

Regarding the experimental outputs, I am honestly not surprised for two reasons. First, AI empathy is not typically a priority for model developers, and they may not have dedicated enough time to incorporate this concept into their systems. Second, I believe some systems even have "moderation filters" in place to prevent AI assistants/companions from providing certain types of responses that are considered personal or sensitive. Therefore, your experiments are even more significant in assessing where different approaches fall on a scale of zero to one.

Visual analysis to help build the sketchbook: Copilot and Replika analysis using Adobe Firefly, with visual outcomes converted in Adobe Illustrator using text prompts.

Sketchbooks

2 visual outcome

Copilot and Replika analysis of empathetic understanding

How can I visually represent this aspect of the research that Arunav and I have concluded?

My idea is to incorporate it into the touch board, where I will use my narrated voice to provide conclusive paragraphs for each Companion AI. These paragraphs will serve as evidence showcasing the emotional understanding demonstrated by each Companion AI alongside sketchbook outcomes.

First face to face conversation with Arunav


After engaging in thought-provoking online discussions with Arunav for over a month, we finally had the chance to meet face to face. Arunav delivered a talk at King's College, diving into the current capabilities and evolution of chatbots, and sharing fascinating insights on the transformation of information. Gone are the days when established literary organisations held the sole power to determine what articles or books could be published. Now, information can be sourced, written, and published by anyone. The emergence of ChatGPT has made it possible to compile vast amounts of data that would be impossible for any human to assimilate. This data is then presented through chatbot and human conversations, sometimes regurgitating accurate information and sometimes not.

After the talk, Arunav and I met for coffee at Pret to delve further into our captivating collaboration.

Arunav shared enlightening thoughts and reasoning about the importance of exploring empathetic AI in our project. Currently, there are concerns regarding the use of empathy in AI, stemming from fears of manipulation and the need for transparency. These concerns are valid, and no one would argue against them. However, in our ongoing collaboration, can we truly deny the value of empathetic training? The undeniable impact of data on our daily lives and the growing intertwinement of our relationship with technology clearly demonstrate that our choices are already influenced by technology itself. It's not a matter of whether technology impacts our decisions; it's a fact. As this intertwinement deepens, artificial intelligence must become more intelligent and understand us as emotional, experiential beings. This understanding has the potential to assist us in making more personally informed choices, questioning personalised biased decisions, and even providing personalised support to vulnerable individuals who may face negative consequences in their financial or mental well-being due to their decisions. It is crucial to emphasise that we do not desire an AI chatbot that uses empathy to manipulate or corrupt. However, it cannot be denied that empathetically trained AI could be an essential component in transforming our lives in positive ways. The current limitations on further developing empathy in AI do not fully facilitate its potential. I believe it is evident that this research aims to navigate, question, and reflect on where those boundaries lie, and whether this might raise discussion about how empathetic AI could potentially evolve and be implemented.

Let's read again!

Inside the robotarium building companions for our old age

The AI Psychotherapist: A Case For and Against

Are friends electric? How chatbots can help defeat loneliness

Exploring the Potential of Empathetic Robots/chatbots in Healthcare


Inside the robotarium building companions for our old age

(The Times / Rhys Blakely, Science Correspondent / Friday February 23 2024, 9.50am)

An estimated 1.4 million older people in Britain feel lonely, but scientists in Edinburgh think ‘empathetic’ machines could help

Arunav provided an interesting article that explores the potential of introducing empathetic robots into the healthcare system.

Thoughts on this article:

Considering Population Growth and the NHS: Exploring the Role of Robots

As our population continues to grow at a remarkable pace, it becomes evident that we must contemplate the integration of robots to assist and alleviate some of the burdens faced by humans, particularly within the NHS. What stood out in this article is the recognition that robots cannot simply replace humans. It is indeed a daunting thought that an elderly person might find themselves so desperate and lonely that their only form of companionship comes from interacting with a robot. While this prospect may seem unsettling, it is important to acknowledge that in certain circumstances, having an artificial friend is preferable to having no friend at all.

The AI Psychotherapist: Examining the Pros and Cons

The AI Psychotherapist: A Case For and Against

Arthur Juliani

Thoughts on this article:

I came across an intriguing article that delved into the advantages and disadvantages of using an AI as a replacement for a human psychotherapist. One of the notable benefits is the ability to receive 24-hour attention when needed, which may be challenging for a human therapist to provide. Additionally, in psychotherapy, building a trusting relationship with a therapist is crucial, and not being able to access them at all times can pose difficulties. This is where an AI chatbot, functioning as an AI psychotherapist, could be a valuable tool. Another advantage highlighted was the AI chatbot's capacity to quickly absorb large amounts of data, surpassing human capabilities in identifying and analyzing issues. Moreover, the AI can retain extensive historical data on a person, which would be challenging for a human to assimilate. These are the positive aspects to consider.

On the other hand, it is important to explore how AI and psychotherapists can work together in a mutually beneficial way. We must acknowledge that there are certain aspects where humans excel. As experiential beings, we have a deep understanding of the physical world and personal experiences, which an AI lacks. Despite its wealth of online knowledge, an AI has only a limited understanding of human emotions and connections, and this is a significant limitation. Human therapists are better equipped in this regard. Furthermore, as experiential beings, we crave physical contact and the presence of another person, especially in situations that require trust and deal with sensitive emotions. It may be challenging to replace the physical presence and interaction offered by a human therapist.

In summary, while an AI psychotherapist can provide 24-hour availability and process vast amounts of data efficiently, there are certain aspects, such as human understanding and the need for physical presence, where human therapists have the upper hand.

Are friends electric? How chatbots can help defeat loneliness

AI relationships present us with a way of forging an emotional connection with technology

Katie Prescott, Technology Business Editor, Wednesday January 17 2024, 12.01am, The Times

There are clear advantages to having a robot friend. They are programmed to be empathetic, patient, reliable, always present. They won’t judge you and you can engage without fear of rejection. They need attention, too, which can make the people talking to them feel wanted.

Granted, this idea raises huge ethical issues — and serious risks. Chatbots can express extreme or misleading views, hallucinate and breach privacy. It also creates an illusion of love, a poor substitute for a “real” friend, a cup of tea and a hug.

However, if we accept that AI is not a replacement for a human but acts in a support function, we can embrace the opportunity for companion bots as the tech becomes more advanced.

The Affective Computing group


MIT Media Lab: Vision: The Media Lab is an interdisciplinary creative playground rooted squarely in academic rigor, comprising dozens of research groups, initiatives, and centers working collaboratively on hundreds of projects. We focus not only on creating and commercialising transformational future technologies but also on their potential to impact society for good.

The Affective Computing group creates and evaluates new ways of bringing together Emotion AI and other affective technologies in order to make people's lives better.

Our primary motivations are to help people who are not flourishing or at risk of not flourishing. Our projects are diverse: from finding new ways to forecast and prevent depression; to inventing new solutions to help exceptional people who face communication, motivation, and emotion regulation challenges; to enabling robots and computers to respond intelligently to natural human emotional feedback; to enabling people to have better awareness of their own health and wellbeing; to giving people better control and protection over their most sensitive, private, personal data. Some of our work focuses on making contributions to basic theory and science, including new improvements to machine learning algorithms, while other projects focus on advancing research outside the lab, with applications aimed at improving the lives of individuals in their everyday environments.

The role of empathy for artificial intelligence accountability

Ramya Srinivasan (Fujitsu Research of America, California) and Beatriz San Miguel Gonzalez (Fujitsu Research of Europe, Madrid, Spain)

I came across an intriguing article discussing empathy and AI accountability. It highlights the importance of evaluating empathy based on accountability rather than purely on gestures, tone, and expression, although these provide valuable insights into the surface level of empathy. Empathetically trained AI systems also need the ability to learn from their mistakes and comprehend the consequences of poorly judged empathetic responses. Could they be guided to develop more ethically considered and empathetic reactions?

5.1. Empathetic AI systems

The incorporation of empathy in AI systems calls for creation and enforcement of new capabilities and requirements such as to consider subjective viewpoints of different stakeholders, to base decisions not only on proxy data, and to appeal across stakeholders. These requirements necessitate an understanding of all stakeholders’ intentions and contextual information related to their affective states, intentions, and socialisation patterns. Furthermore, empathetic AI systems should care for users’ well-being and demonstrate responsibility in decision making.

Fig. 2 illustrates the connections between the dimensions of accountability and empathy to produce an empathetic AI system.

Artist and Data science collaborations focussing on empathetic AI understanding


Sougwen Chung is an artist who collaborates with AI to explore human-machine collaboration. Her project "D.O.U.G._1" (Drawing Operations Unit Generation 1) involves robots that learn to draw by observing and mimicking her gestures. This project delves into the empathetic relationship between human creativity and machine learning.

Collaboration: Human-machine collaborative Performance

2017 Drawing Operations Unit: Generation 2 (Memory)

My thoughts and comparisons

The potential of AI to mimic Sougwen Chung's gestures and create emotional drawing outcomes through human-machine collaboration is a captivating concept. It allows us to assess AI's proficiency in conveying emotional responses through direct drawing interactions.

As Arunav and I explore how to decipher AI empathy through collaboration between ourselves and AI, our approach takes a different path. I explicitly ask a chatbot to generate visual prompts based on emotional expressions, which I then interpret visually myself. The significance of personally translating these prompts lies in my belief that AI and humans must work together collaboratively to foster creativity, each utilising our unique skills. Presently, AI lacks the capacity to fully understand or perceive experiential responses that are directly linked to existing in a physical world rather than a virtual one. However, it can draw on large amounts of data that encompass emotional experiences with potential universality and cultural diversity.

This approach can be viewed as a form of collaboration, albeit one that is more literal and data-driven. To further expand our research, involving audience participation in the analysis would be invaluable. By including the audience in deciphering their thoughts on the expressions conveyed through sound and gesture outcomes, we can compare their interpretations with those of an AI chatbot. Ultimately, our objective is to assess the extent to which AI can accurately mimic human understanding of emotions.

Mario Klingemann, an artist known for his work with generative adversarial networks (GANs), explores the empathetic potential of AI by creating art that evokes human emotions. His project **"Memories of Passersby I"** uses GANs to generate endless portraits of fictional people, aiming to invoke empathy and contemplation in the viewer.

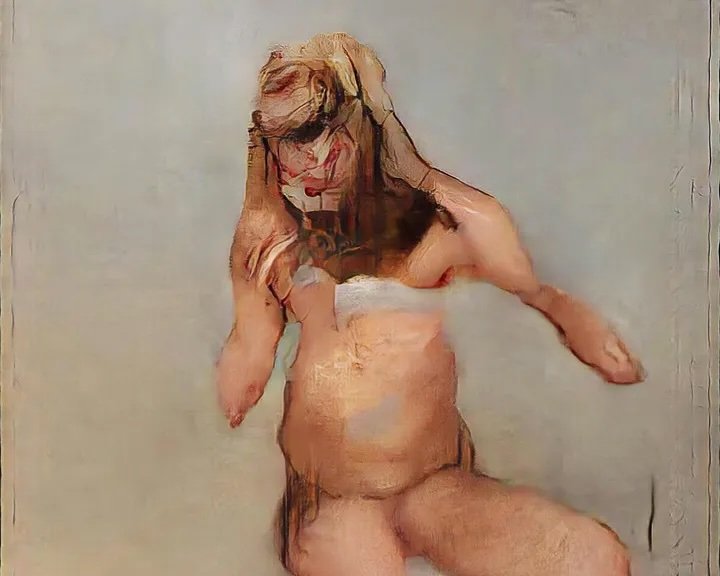

Working with body shapes and nudes, Klingemann reinterprets the notion of collage as the machine merges elements to create a computer-like aesthetic involving a painterly yet blurry feel, a pixelized yet high-res quality. The Butcher’s Son (2018), which owed him this year’s Lumen Prize, captures this effect most beautifully. Another good example is My Artificial Muse (2017), an installation he created with artist Albert Barqué-Duran and which consisted of a reclining nude produced through AI. While Klingemann’s production was projected on a screen showing its digital nature, Barqué reproduced this production with oil painting trying to mimic the texture and visual characteristics of the artificial creation.

Mario Klingemann, The Butcher’s Son, Artificial Intelligence, 2018, © Lumen Prize.

While you can train a machine to stick to reality, you can also manipulate what it learns so that it produces visuals following other criteria — in Klingemann’s case reflecting his artistic gist. As part of his working method, Klingemann first curates a selection of photographs he feeds his models with and then trains his models — both generators and discriminators — in making mistakes or sticking to a fake visual reality that corresponds to his aesthetic appraisal. The computer then generates numerous images within a latent space (a multi-dimensional, virtual environment) that is constructed overtime while running through multiple GANs, each time altering different features like noisiness, entropy, redundancy, textures, and structures to refine the image. When the general impression within the latent space satisfies Klingemann, the artist starts selecting, framing, and capturing what he finds most interesting in the computers’ visual constructions; a final step that he calls “neurography,” as in photographing what he sees within the space created through neural networks.

Written by DANA, Editor for DANAE.IO / Network for digital creation and its copyright management, helping art galleries and cultural institutions engage in the NFT space

It is interesting to note that Klingemann also felt compelled to translate his coded, screen-based depiction of an AI translation of Francis Bacon's work into an oil painting. The digital outcome just felt like it wasn’t enough.

While Klingemann’s production was projected on a screen showing its digital nature, Barqué reproduced this production with oil painting trying to mimic the texture and visual characteristics of the artificial creation.

AI robot as autonomous creator


A wonderful colleague and all-around lovely human being introduced me to Ai-Da.

After conducting some research, I discovered that Ai-Da is an AI robot who presents themselves as an autonomous artist. Ai-Da possesses the remarkable ability to draw, paint, and create sculpture using cameras embedded in their eyes. However, there is a significant challenge when it comes to developing emotional understanding. Many text-based large language models lack perception of the physical world and, as a result, struggle to grasp the human experience. This limitation hampers their ability to fully comprehend the physicality of the human form, which is often exemplified by image-based AI generators producing four-fingered hands and video outcomes showing dismembered bodies in motion.

It is truly fascinating to ponder whether, as ultra-realistic robots continue to advance, they too might have genuine experiences in the physical realm and rival human artists in their capabilities. Ai-Da's work consists largely of portrait-based and abstract paintings and sculpture, which, while not groundbreaking, still possess their own unique essence. However, conceptually, I am intrigued by the potential of further developing robots and exploring the alternative perspectives they offer in relation to the physical environment. Such exploration may lead to unconventional and captivating avenues of creative investigation.

WHO IS AI-DA

Ai-Da is the world’s first ultra-realistic robot artist. She can draw, and is a performance artist. As a machine with a fusion of electronic/AI/human inputs, her composite persona is the artwork, along with her drawings, performance art and collaborative paintings and sculptures. (Text with reference to the source below.)

( www.ai-darobot.com is a site operated by Dedicated Art Ltd ("We"). We are a company registered in England and Wales under company number 11461254 and are registered at 42 Lytton Road, Barnet, Hertfordshire, EN5 5BY, United Kingdom.)

Sentence 10/11

“Algorithms dictate my choices.”

Hume.AI has introduced the first AI voice that responds empathetically, and I had the opportunity to try it out. I must say, it's quite impressive. The AI can detect subtle nuances in your voice, even ones that you might not notice yourself, such as boredom or interest. The only drawback is that it tends to talk a lot and leaves little room for you to speak. I guess teaching a chatbot the polite social norms of conversation will be an additional consideration in this growing trend of voice-activated assistants.